Problem statement:

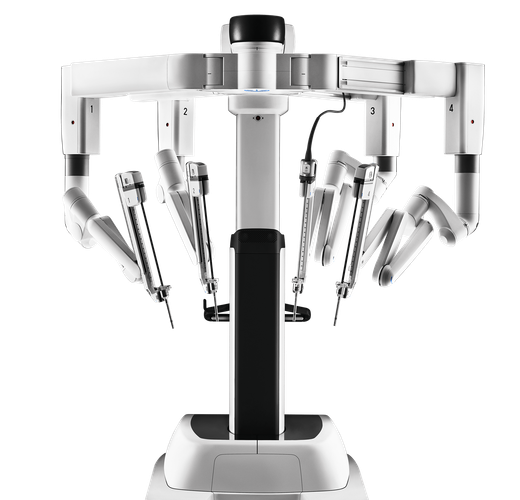

Prostate cancer is the second most common cancer in men. Treatment includes surgical removal of the prostate gland, which is often performed in a minimally invasive way using robotic surgery.

Orsi Academy is the world's largest training centre for robotic surgery and has broad expertise in minimally invasive surgery. Building on this expertise, Orsi has developed and validated a state-of-the-art training methodology called "Proficiency-Based Progression", in which surgeons are trained to a defined level of proficiency before they are allowed to operate on real patients.

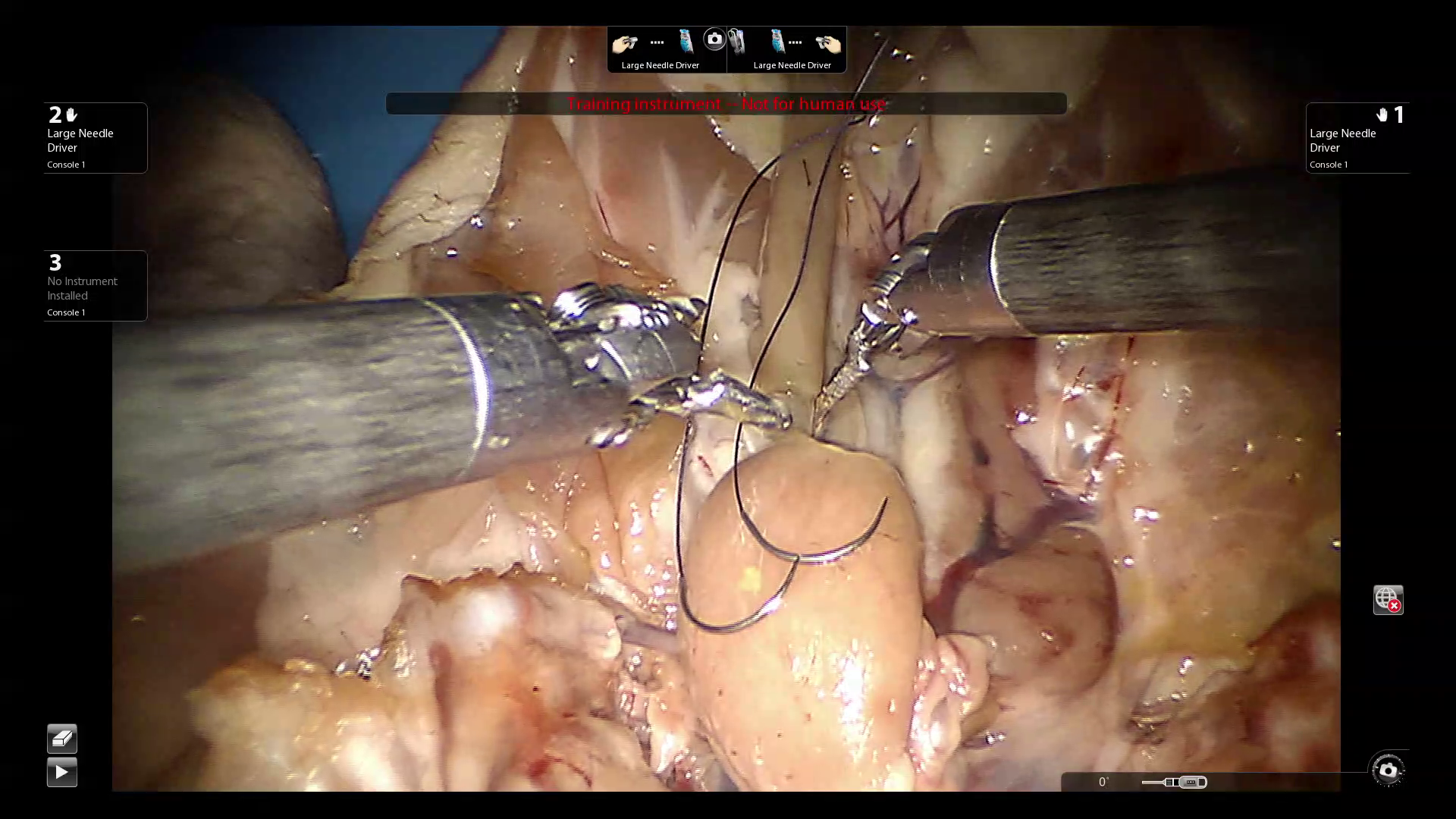

The specific metrics used in this methodology make surgical performance objectively measurable through a standardised approach. However, the assessment itself is still carried out entirely by hand by experienced surgeons. Current computer vision methods only support coarse distinctions in scoring, such as a "novice - intermediate - expert" scale, and are rarely trained on real-life surgical images because of a lack of high-quality data.

Such coarse scoring is clearly too imprecise to serve as a basis for certification.

Orsi Academy is therefore heavily involved in automating this type of assessment with computer vision techniques such as deep learning. As mentioned above, a major bottleneck in this rapidly developing field is the lack of high-quality data.

However, owing to its central teaching role in the robotic surgery landscape, Orsi holds very large labeled and unlabeled datasets in house, unparalleled in the current literature, on which new computer vision techniques can be applied.